|

| Wrong! |

The raw data file ended up being about 12.5GB, or just over 2M tweets, so it still fits into the 'medium data' category (i.e. a four-year-old number-crunching server can comfortably hold it in memory). I was hoping to use tools like Spark, and maybe I will port my code to it at some point, but there was no need. You can check out (!) my code here.

|

| Guess which one is which. |

What I thought was quite smart is the way Healey et al. average sentiment. The sentiment database mentioned above contains a mean and a standard deviation of valence (positive/negative affect) for each word, estimated from many measurements. They take both of these and evaluate the normal distribution's pdf at its own mean, which works out to 1/(σ√(2π)): the smaller the standard deviation, the higher the value, so it acts as a measure of certainty about each word's valence. This is then used as a weight when averaging the sentiment over the whole tweet.

I only analysed sentiment for tweets with >= 2 words in the database, which excluded ~200k tweets (10%) from analysis.
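To make the weighting and the two-word cut-off concrete, here is a minimal Python sketch of how I read the approach. It is not my actual analysis code: the `LEXICON` entries are made-up numbers, the function names are hypothetical, and splitting on whitespace is a simplifying assumption about tokenisation.

```python
import math

# Hypothetical lexicon entries: word -> (mean valence, standard deviation).
# These numbers are invented for illustration, not taken from the real database.
LEXICON = {
    "happy": (8.2, 1.0),
    "debate": (5.0, 2.0),
    "liar": (2.0, 1.5),
}


def pdf_at_mean(sd):
    """Height of a normal pdf evaluated at its own mean: 1 / (sd * sqrt(2*pi))."""
    return 1.0 / (sd * math.sqrt(2.0 * math.pi))


def tweet_valence(text, lexicon=LEXICON, min_words=2):
    """Certainty-weighted mean valence, or None if too few words are in the lexicon."""
    # Naive whitespace tokenisation (an assumption, real tweets need more care).
    known = [lexicon[w] for w in text.lower().split() if w in lexicon]
    if len(known) < min_words:
        return None  # tweet is excluded from the analysis
    weights = [pdf_at_mean(sd) for _, sd in known]
    weighted_sum = sum(mean * w for (mean, _), w in zip(known, weights))
    return weighted_sum / sum(weights)
```

Words with a small standard deviation pull the tweet's score towards their own mean, while words people disagree about count for less. A tweet with fewer than two known words returns `None` and is dropped.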

We can see that:

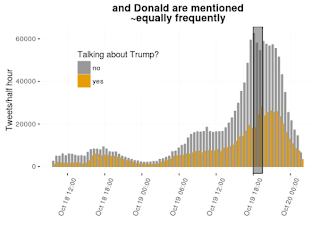

Both candidates are mentioned about equally often during the debate (grey highlight on the right), though Donald gets a few more mentions during the night (Twitter wars?).

Mentions of each candidate seem to spike during the debate, though tweets mentioning both at the same time peak a few hours later (comparisons?).

The sentiment of tweets that mention the candidates is lower (more 'angry'/'bad'/'racist') than in tweets that do not mention them (note: the line is a smooth curve fitted by ggplot2 using a generalized additive model):

Not sure if the rise in sentiment for tweets mentioning Trump is a blip, or maybe it's because he lied only a tiny bit that day?

However, it seems that the more people mention any candidate, the worse they feel about them. Even more so if they mention more than one candidate:

But hey, I can see some hombres on the horizon!

And they are bad! Tremendously bad!